Researchers and companies are developing artificial intelligence (AI) that enables robots to recognize, understand, and appropriately respond to human emotions, helping to bridge the communication gap between humans and machines.

Robots are increasingly part of everyday life, serving as assistants and companions across many fields, from the food industry to manual assistance and beyond. To make human-robot interaction smoother, these machines are being equipped with artificial intelligence that lets them grasp human emotions and use that understanding in everything from daily interactions to business operations, marketing campaigns, and healthcare support. This article explores emotional AI in robotics, explaining how it works and what it implies.

Understanding Human Emotions: The Future of AI Robots

Research shows that people can recognize emotions such as stress, aggression, and excitement in others from body movements alone, without seeing facial expressions or hearing a voice. Because these emotional cues are carried by movement patterns, they can in principle be taught to robots, helping them learn to recognize human emotions. The discipline that studies this is known as affective computing, sometimes described as emotional intelligence for machines.

The idea of emotional intelligence in machines, usually called affective computing, dates back to 1995, when MIT Media Lab professor Rosalind Picard pioneered the field with her seminal work, “Affective Computing.”

As Professor Picard elucidates, “Just like we can understand speech and machines can communicate in speech, we also understand and communicate with humor and other kinds of emotions. And machines that can speak that language — the language of emotions — are going to have better, more effective interactions with us. It’s great that we’ve made some progress; it’s just something that wasn’t an option 20 or 30 years ago, and now it’s on the table.”

Indeed, a core objective in the evolving field of human-robot interaction is the development of intelligent machines capable of accurately recognizing human emotional states and providing appropriate, empathetic responses.

Insights from the Research Study

To effectively teach robots the spectrum of human emotions, it’s crucial to first analyze how people move and interact when experiencing different emotional states. A pivotal study, led by researchers from Warwick Business School, the University of Plymouth, the Donders Centre for Cognition at Radboud University in the Netherlands, and the Bristol Robotics Lab at the University of the West of England, observed children engaged in play with a robot and a touch-screen computer integrated into a table.

The footage was then shown to a team of 284 psychologists and computer scientists, who analyzed the children’s interactions, judging whether the children were cooperating or competing or whether one child was dominant, and identifying the emotions they expressed, such as excitement, boredom, or sadness.

In a striking result, a separate group of observers watched the same videos reduced to simple ‘stickman’ figures that showed only the children’s movements. Their labels matched those of the first group, indicating that children’s movements alone convey emotions that others recognize consistently rather than by guesswork.
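To illustrate why a stick figure still carries emotional information, here is a minimal sketch, assuming each video frame has already been reduced to 2-D joint coordinates. The frame format and the two features (mean joint speed and body expansiveness) are hypothetical choices for illustration, not the study’s actual method.

```python
from math import dist

# Hypothetical format: a frame is a list of (x, y) joint positions,
# with the same joints in the same order in every frame; a clip is a list of frames.
Frame = list[tuple[float, float]]


def mean_joint_speed(clip: list[Frame], fps: float = 30.0) -> float:
    """Average distance a joint moves per second across the clip."""
    if len(clip) < 2:
        return 0.0
    total = 0.0
    steps = 0
    for prev, curr in zip(clip, clip[1:]):
        for a, b in zip(prev, curr):
            total += dist(a, b)  # Euclidean distance moved between frames
            steps += 1
    return total / steps * fps


def expansiveness(clip: list[Frame]) -> float:
    """Mean bounding-box area of the stick figure; open, expansive poses score higher."""
    areas = []
    for frame in clip:
        xs = [x for x, _ in frame]
        ys = [y for _, y in frame]
        areas.append((max(xs) - min(xs)) * (max(ys) - min(ys)))
    return sum(areas) / len(areas)


# Two toy two-joint clips: one still, one moving and spreading out.
calm = [[(0.0, 0.0), (1.0, 2.0)], [(0.0, 0.0), (1.0, 2.0)]]
moving = [[(0.0, 0.0), (1.0, 2.0)], [(0.5, 0.0), (2.0, 3.0)]]
print(mean_joint_speed(calm), mean_joint_speed(moving))
print(expansiveness(calm), expansiveness(moving))
```

Simple movement statistics like these turn a stick figure into numbers that a learning algorithm can work with.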

The researchers then trained a machine-learning algorithm to label the video clips, identifying the social interaction, the emotions evident in each scenario, and the intensity of each child’s internal state. This let the algorithm judge, for example, which of two children appeared sadder or more excited.
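A minimal sketch of the idea, assuming each clip has already been summarised as a numeric feature vector (for example, movement speed and expansiveness) with human-assigned emotion and intensity labels. It uses k-nearest neighbours, a deliberately simple stand-in; the study’s actual algorithm is not described here, and the labels, features, and training data below are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from math import dist


@dataclass
class Example:
    features: tuple[float, ...]  # hypothetical, e.g. (mean joint speed, expansiveness)
    emotion: str                 # human-assigned label, e.g. "excited"
    intensity: float             # human-rated strength of the emotion, 0 to 1


def predict(train: list[Example], features: tuple[float, ...], k: int = 3) -> tuple[str, float]:
    """k-NN sketch: majority vote on the emotion, then average the intensity
    of the neighbours that share the winning label."""
    nearest = sorted(train, key=lambda ex: dist(ex.features, features))[:k]
    emotion = Counter(ex.emotion for ex in nearest).most_common(1)[0][0]
    matching = [ex.intensity for ex in nearest if ex.emotion == emotion]
    return emotion, sum(matching) / len(matching)


# Toy labelled clips (made-up numbers for illustration only).
train = [
    Example((30.0, 2.5), "excited", 0.9),
    Example((25.0, 2.2), "excited", 0.6),
    Example((28.0, 2.4), "excited", 0.8),
    Example((3.0, 0.8), "sad", 0.7),
    Example((5.0, 1.0), "sad", 0.4),
    Example((4.0, 0.9), "sad", 0.5),
]

child_a = predict(train, (29.0, 2.4))
child_b = predict(train, (4.5, 0.95))
print(child_a, child_b)
```

Comparing the predicted intensities of two children who receive the same label would indicate which one appears, say, sadder.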

The study’s conclusions are promising: “Our results suggest it is reasonable to expect a machine learning algorithm, and consequently a robot, to recognise a range of emotions and social interactions using movements, poses, and facial expressions. The potential applications are huge.”

The ultimate goal of this research is to build robots that respond intelligently to human emotions in challenging environments, handling difficult situations and resolving problems without constant human oversight or explicit instructions, a significant step toward greater robotic autonomy.

Pioneering Emotional AI Robots Available Today

Companies are now applying Emotion AI, including emotionally aware robots, in sectors such as marketing, business, and healthcare. Truly nuanced human-robot interaction requires full-spectrum emotion AI, a primary goal for companies such as Affectiva. The company has trained its algorithms on a dataset of more than nine million faces from diverse populations worldwide, enabling its technology to detect seven core emotions: anger, contempt, disgust, fear, surprise, sadness, and joy. Affectiva focuses mainly on marketing, partnering with firms to analyze consumer reactions to advertisements and create more effective campaigns.
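Affectiva’s models are proprietary, so the following is only a hypothetical sketch of how a classifier covering the seven emotions might report its result. It assumes the model emits one raw score per emotion and uses a softmax, a common choice in multi-class classifiers, to turn those scores into probabilities; the scores shown are made up.

```python
from math import exp

# The seven emotions listed above.
EMOTIONS = ("anger", "contempt", "disgust", "fear", "surprise", "sadness", "joy")


def softmax(scores: list[float]) -> list[float]:
    """Convert raw per-emotion scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max before exponentiating for numerical stability
    exps = [exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_emotion(scores: list[float]) -> tuple[str, float]:
    """Return the most probable emotion and its probability."""
    if len(scores) != len(EMOTIONS):
        raise ValueError("expected one score per emotion")
    probs = softmax(scores)
    best = max(range(len(EMOTIONS)), key=lambda i: probs[i])
    return EMOTIONS[best], probs[best]


# Hypothetical raw scores for one viewer's face while watching an advert.
label, p = top_emotion([0.1, -1.0, -0.5, 0.0, 1.2, -0.3, 2.5])
print(label, round(p, 3))
```

Reporting a probability alongside the label lets an analyst distinguish a clear reaction from an ambiguous one.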

In healthcare, Expper Tech’s ‘Robin’ robot, designed to offer engaging and emotionally supportive companionship to children undergoing medical treatment, has been deployed in hospitals.

By 2050, the world’s elderly population is projected to reach 1.6 billion, approximately 17% of the global population and roughly double today’s number, so the need for consistent support and care will only grow. Emotionally intelligent robots could help meet this need by providing assistance and companionship.

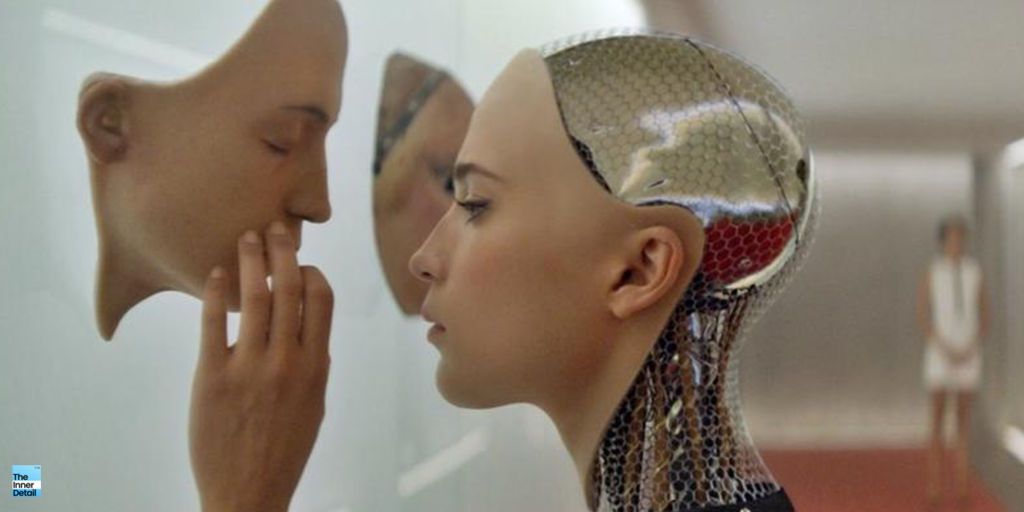

Concerns exist that emotional robots could be manipulated for self-serving purposes, but their practical benefits far outweigh such risks, which has pushed Emotion AI to the forefront of rapidly advancing technologies. Advances in robotics now let machines not only read and understand human emotions but also display them realistically on robotic faces, as the humanoid robot Ameca from Engineered Arts demonstrates.

(For more engaging content on informational topics, cutting-edge technology, and innovative breakthroughs, continue exploring The Inner Detail).
